Testing GTX970 vs CPU

Converting PDFs of old books into Epubs

I posted earlier on how to create a decent Epub from a scanned PDF of an old book: see here.

The conversion is done with an Nvidia GTX 970, a ten-year-old GPU with only 4 GB of VRAM. Interestingly, it was even the source of some controversy because its memory is split: for games it is effectively a GPU with 3.5 GB of RAM at full speed plus 512 MB at a lower speed. Given the limited memory, I created (with the vibing help of ChatGPT) a bash framework that first splits the file into smaller PDFs, then runs the software on the smaller files, and finally combines the results again.
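To illustrate the splitting step, here is a minimal Python sketch of how the page ranges for the smaller PDFs could be computed. It is not the bash framework itself; the function name `chunk_ranges` is made up for this example, and it only calculates the ranges without touching any PDF.

```python
def chunk_ranges(total_pages: int, chunk_size: int) -> list[tuple[int, int]]:
    """Return 1-based, inclusive (first, last) page ranges covering the document.

    Hypothetical helper for illustration only: the last range is shorter
    when total_pages is not a multiple of chunk_size.
    """
    if total_pages < 0 or chunk_size < 1:
        raise ValueError("total_pages must be >= 0 and chunk_size >= 1")
    return [
        (start, min(start + chunk_size - 1, total_pages))
        for start in range(1, total_pages + 1, chunk_size)
    ]


# A 10-page PDF split into chunks of 4 pages gives three smaller files.
print(chunk_ranges(10, 4))  # [(1, 4), (5, 8), (9, 10)]
```

Every boundary between two consecutive ranges is a place where the converted Markdown has to be joined again.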

This works, but there is a downside: where the files are combined again, there is a gap with a line in between. As the result is a Markdown file this can be cleaned up, but that takes some effort, especially as I chose not to automate it.

I could choose to skip the GPU and run on the CPU only, as I have plenty of system RAM. One whole book is still too much for the amount of RAM I have, but instead of splitting into PDFs of 4 pages I could probably use PDFs of 50 pages. That would mean a lot less manual cleanup. The conversion would obviously take a lot longer, but as I don’t have to babysit this process it might make sense. So I did some tests.

The tests

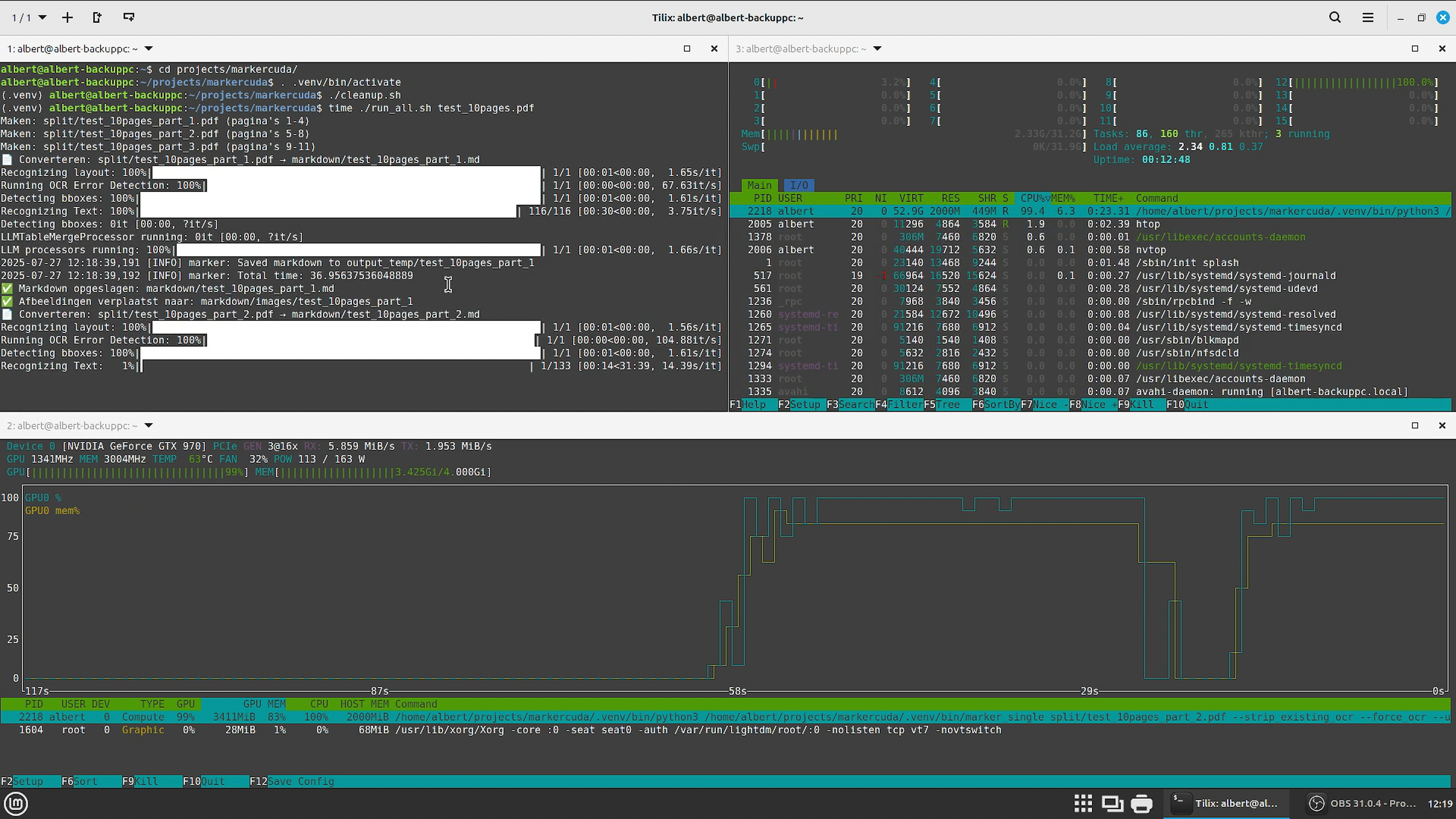

I did not run a large set of tests. I chose to run this on a PDF of 10 pages with one image in it, which the script split into files of at most 4 pages. Here are the results:

| Test run | Pages | Batch size | System power | Real | User | Sys | Remarks |
|---|---|---|---|---|---|---|---|
| GTX 970 | 10 | 4 pages | 180 W | 2m15s | 2m20s | 0m11s | 1 image in PDF |
| Ryzen 7 2700X | 10 | 4 pages | 125 W | 9m57s | 70m26s | 2m57s | 1 image in PDF |

Note that the wattages are for the whole system, not just the GPU or CPU.

The GPU run drew more power (180 W versus 125 W for the whole system). However, it was remarkably faster, even though this is such an old GPU: the trusty GTX 970 finished more than 4 times faster (2m15s versus 9m57s).
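The numbers from the table can be worked out in a few lines of Python. This is a rough sketch that assumes the measured wattage was roughly constant for the whole run, which I did not verify, so the energy figures are only an estimate.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a 'XmYs' wall-clock time to seconds."""
    return minutes * 60 + seconds


def energy_wh(watts: float, seconds: float) -> float:
    """Energy in watt-hours, assuming constant power draw (an assumption)."""
    return watts * seconds / 3600


gpu_s = to_seconds(2, 15)   # real time of the GTX 970 run
cpu_s = to_seconds(9, 57)   # real time of the Ryzen 7 2700X run

speedup = cpu_s / gpu_s
gpu_wh = energy_wh(180, gpu_s)
cpu_wh = energy_wh(125, cpu_s)

print(f"speedup: {speedup:.2f}x")       # speedup: 4.42x
print(f"GPU run: {gpu_wh:.2f} Wh")      # GPU run: 6.75 Wh
print(f"CPU run: {cpu_wh:.2f} Wh")      # CPU run: 20.73 Wh
```

So under that assumption the GPU run uses more power while it runs, but about a third of the energy for the whole job because it finishes so much sooner.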

Conclusion

Despite the extra cleanup, it saves a lot of time to use even such an old GPU.

Hardware choices

I tried larger PDF chunks, but anything over 4 pages kept failing; nvtop showed that the job needed more memory than the card has. Roughly speaking, it seems to take 1 GB of VRAM per page. According to what I read, PyTorch also works fine on AMD GPUs, and AMD GPUs with more memory are more affordable than Nvidia ones. Intel’s GPUs are even more affordable, but it is unclear to me how well they are supported by PyTorch, which is required for the conversion. It seems that the best bang for the buck right now is an AMD RX 7600 XT with 16 GB. For a 200-page PDF that would mean 12-13 manual corrections versus 50 with the 4 GB GTX.
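The estimate for the number of manual corrections can be reproduced with a small sketch. It assumes the rough rule of thumb from above (1 GB of VRAM per page, so a 16 GB card handles chunks of about 16 pages), which is an observation on my setup and not a guarantee. The function name `chunks_and_seams` is made up for this example.

```python
import math


def chunks_and_seams(total_pages: int, pages_per_chunk: int) -> tuple[int, int]:
    """Return (number of chunks, number of seams between chunks).

    Hypothetical helper: a seam is a place where two converted chunks
    are joined and may need a manual fix.
    """
    chunks = math.ceil(total_pages / pages_per_chunk)
    return chunks, max(chunks - 1, 0)


# Assumption: roughly 1 GB of VRAM per page, so pages per chunk ~ VRAM in GB.
for vram_gb in (4, 16):
    chunks, seams = chunks_and_seams(200, vram_gb)
    print(f"{vram_gb} GB: {chunks} chunks, {seams} seams")
# 4 GB: 50 chunks, 49 seams
# 16 GB: 13 chunks, 12 seams
```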

If you really want to get serious: hardware with loads of ‘unified memory’, like Apple’s M3 or M4 systems or systems based on the Ryzen AI Max+, seems very interesting. The latter is a lot more affordable, and you can run Linux on it. Apparently a 64 GB version is already available for € 1499. As the memory is unified and shared with the rest of the system, you can’t use the full 64 GB for the conversion, but 48 GB should be doable. A 128 GB version is coming as well.